Life of a Go Infrastructure Maintainer

I originally gave this as a talk at the Seattle Go Meetup on 2017-02-16 (video). The following is a refined version of the talk, not just a verbatim transcript, based on my speaker notes.

What do I do?

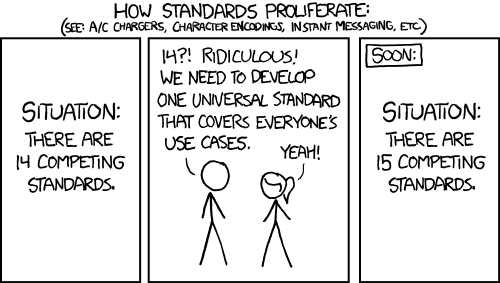

I am the Tech Lead for Go on Cloud at Google. When you hear this, you may think, “Ross creates libraries.” And that could be reasonable. Google offers a Cloud Platform for hosting and that has a whole bunch of services. You need Go libraries to be able to talk to these services. But it turns out, creating libraries is a rather infrequent occurrence. More often, a library already exists, so creating a new one would result in this scenario:

Standards from xkcd by Randall Munroe. Used under a Creative Commons License.

Clearly we don’t want 15 competing libraries.

Perhaps a better description of what I do is “maintaining libraries.” But the term maintenance traditionally implies that the software is fundamentally staying the same: bugs are fixed or functionality is added on top of a solid base. It sounds almost peaceful. But more often that not, when we throw an API into the wild, we get bug reports where our customers use libraries in ways we don’t expect. The libraries behave in ways that neither we or our users expect. The typical engineering response would be not to do that: “You’re holding it wrong!” But this is short-sighted: the better engineering response is to make it impossible to “hold” the library wrong. This makes for simpler, more robust systems, especially as we add and adapt new functionality to handle these cases.

Tension comes because you can’t break anybody’s existing applications. These applications are part of many people’s day-to-day; businesses want these fixes, but don’t want to subject themselves to churn to get them. But just about any change can break applications, even seemingly innocuous ones. Imagine the following contrived contacts library:

package contacts

type Person struct {

FirstName string

LastName string

}

func Me() *Person {

return &Person{

FirstName: "Ross",

LastName: "Light",

}

}

And an application with tests that uses it:

package app

import "contacts"

func GreatSpeaker() *Person {

return contacts.Me()

}

func TestMyName(t *testing.T) {

got := GreatSpeaker()

want := &Person{

FirstName: "Ross",

LastName: "Light",

}

if !reflect.DeepEqual(got, want) {

t.Errorf("got %+v; want %+v", got, want)

}

}

Imagine that after lots of feedback, we realize that most everybody using this contacts library wants to get the contact’s favorite number. We add a new field, which we think should be a safe change. After all, we’re merely layering new functionality, not changing existing functionality.

package contacts

type Person struct {

FirstName string

LastName string

FavoriteNumber int

}

func Me() *Person {

return &Person{

FirstName: "Ross",

LastName: "Light",

FavoriteNumber: 42,

}

}

But this breaks the test above! reflect.DeepEqual notices that the golden

input’s FavoriteNumber is zero (implicit because the composite literal does

not initialize the field), which is different from the received

FavoriteNumber.

Given that this seemingly innocuous change broke the tests, how do I as an infrastructure maintainer gain confidence that I’m not breaking anything? Automated testing. Before joining the Go Cloud team, I worked on its counterpart team of Go inside Google. Google has a really strong testing culture where its product teams write their own automated tests. HEAD is always expected to pass tests; breaking changes should be rolled back ASAP. (This has been written about extensively.) This is great, because I can just make my change in my local working copy, then run every affected test. This is harder outside Google, but the principle remains the same: if a customer has written good tests, they can catch breakages by running their test suite on the updated dependencies before rolling them out.

The value of writing tests has been extolled endlessly; I am writing here to inform you of the costs of writing bad tests. As you can tell from this example, a broken test does not mean a broken application. Perhaps the test is trying to avoid accidentally leaking new fields to the client (over JSON serialization perhaps), but more often than not, tests overspecify their expectations. This makes my job more difficult. The most (somberingly) accurate description of my job as a maintainer is: I fight bad tests.

Lessons from Fighting Bad Tests

Here are specific patterns in Go that have hindered me in improving libraries.

- Please don’t use

reflect.DeepEqual. If you do this, I can’t add fields. Usingreflect.DeepEqualtends to overspecify which fields are significant for the test, at the sake of some momentary convenience while writing. The go-cmp package was designed to avoid this problem. - Please don’t use

encoding/gob. Especially on structs you don’t own. Again, I can’t add (or reorder) fields. There are better alternatives out there, like JSON or protobufs. I have seen this cause breakages in the wild because a library I was working on reordered fields and caused a service to start issuing different pagination tokens. It happens. - Please don’t check

Error(). … But when you do, please usestrings.Contains. I can’t add new error details that give more context. If you compare on the exact error string, then any new (or removed) bytes from that string will cause a failure. - Write structs, not interfaces. If there’s only one implementation of a method call, then there’s only one codepath that has to be reasoned about. Only add abstraction when you need it.

- Report bugs. If a library has a bug, report it to the upstream author. If we can’t see a problem, we can’t fix it.

Why does this happen?

Taking a step back, why do bad tests happen? What is the common thread that connects these issues? The problem here is that making seemingly miniscule changes in a library is causing large problems in an application. This is captured much more eloquently by Hyrum Wright, a former Google software engineer:

Given enough use, there is no such thing as a private implementation. That is, if an interface has enough consumers, they will collectively depend on every aspect of the implementation, intentionally or not. This effect serves to constrain changes to the implementation, which must now conform to both the explicitly documented interface, as well as the implicit interface captured by usage. We often refer to this phenomenon as “bug-for-bug compatibility.”

(Emphasis mine, original here.)

The takeaway for your next new shiny application is that to avoid churn whenever you update, follow these rules:

- Only depend on the documented interface where possible. If you want specific behavior, ask for it to be documented.

- Only test what you need. No test is better than a brittle test.

- Use fakes, not mocks. If a part is hard to use under test, then either write a fake or redesign your part.

(It’s worth noting that all of the examples are phrased in terms of tests, but if you’re depending on implicit behavior in production, REALLY rethink your usage.)